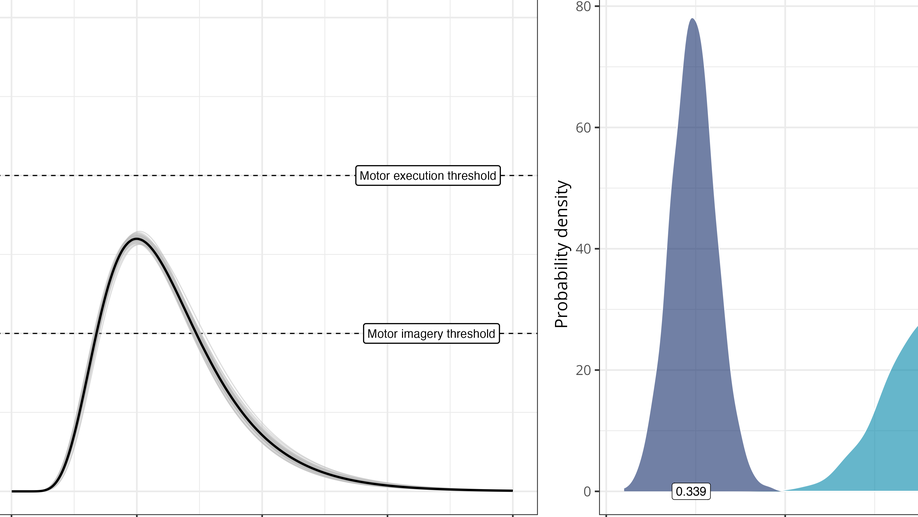

Towards formal models of inhibitory mechanisms involved in motor imagery: a commentary on Bach et al. (2022)

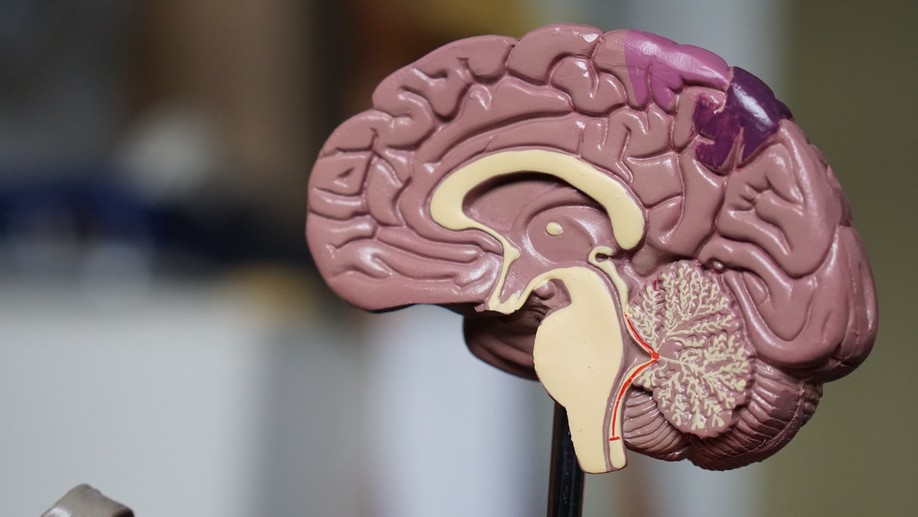

A vast body of research suggests that the primary motor cortex is involved in motor imagery. This raises the issue of inhibition: how is it possible for motor imagery not to lead to motor execution? Bach et al. (2022) suggest that the motor execution threshold may be ‘upregulated’ during motor imagery to prevent execution. Alternatively, it has been proposed that, in parallel to excitatory mechanisms, inhibitory mechanisms may be actively suppressing motor output during motor imagery. These theories are verbal in nature, with well-known limitations. Here, we describe a toy model of the inhibitory mechanisms thought to be at play during motor imagery, as a first step towards disentangling the predictions of competing hypotheses.

Distinct neural mechanisms support inner speaking and inner hearing

Humans have the ability to mentally examine speech. This covert form of speech production is often accompanied by sensory (e.g., auditory) percepts. However, the cognitive and neural mechanisms that generate these percepts are still debated. According to a prominent proposal, inner speech has at least two distinct phenomenological components: inner speaking and inner hearing. We used transcranial magnetic stimulation (TMS) to test whether these two phenomenologically distinct processes are supported by distinct neural mechanisms. We hypothesised that inner speaking relies more strongly on an online motor-to-sensory simulation that constructs a multisensory experience, whereas inner hearing relies more strongly on a memory-retrieval process, where the multisensory experience is reconstructed from stored motor-to-sensory associations. Accordingly, we predicted that the speech motor system would be involved more strongly during inner speaking than during inner hearing. This would be revealed by modulations of TMS-evoked responses at the muscle level following stimulation of the lip primary motor cortex. Overall, data collected from 31 participants corroborated this prediction, showing that inner speaking increases the excitability of the primary motor cortex more than inner hearing. Moreover, this effect was more pronounced during the inner production of a syllable that strongly recruits the lips (vs. a syllable that recruits the lips to a lesser extent). These results are compatible with models assuming a central role of the primary motor cortex in inner speech production and contribute to clarifying the neural implementation of the fundamental ability to speak silently in one’s mind.

Time perception: When randomization hurts

Short journal club about the unexpected consequences of randomisation in psychological research.

The role of motor inhibition during covert speech production

Covert speech is accompanied by a subjective multisensory experience with auditory and kinaesthetic components. An influential hypothesis states that these sensory percepts result from a simulation of the corresponding motor action that relies on the same internal models recruited for the control of overt speech. This simulationist view raises the question of how it is possible to imagine speech without executing it. In this perspective, we discuss the possible role(s) played by motor inhibition during covert speech production. We suggest that considering covert speech as an inhibited form of overt speech maps naturally onto the purported progressive internalisation of overt speech during childhood. We further argue that the role of motor inhibition may differ widely across different forms of covert speech (e.g., condensed vs. expanded covert speech) and that considering this variety helps reconcile seemingly contradictory findings from the neuroimaging literature.

A fully automated, transparent, reproducible, and blind protocol for sequential analyses

Despite many cultural, methodological, and technical improvements, one of the major obstacles to the reproducibility of results remains pervasive low statistical power. In response to this problem, a lot of attention has recently been drawn to sequential analyses. This type of procedure has been shown to be more efficient (to require fewer observations and therefore fewer resources) than classical fixed-N procedures. However, these procedures are subject to both intrapersonal and interpersonal biases during data collection and data analysis. In this tutorial, we explain how automation can be used to prevent these biases. We show how to synchronise free and open experiment software programs with the Open Science Framework and how to automate sequential data analyses in R. This tutorial is intended for researchers with beginner-level experience with R; no previous experience with sequential analyses is required.
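As a rough illustration of what automating a sequential procedure can mean, the sketch below fixes a stopping rule in code before any data are collected, so that the decision to stop or continue does not depend on the experimenter's judgement. It is written in Python rather than R, and everything in it is an assumption made for illustration: the function name `sequential_decision`, the use of a Bayes factor as the evidence measure, and the thresholds and sample-size bounds are hypothetical and are not taken from the tutorial.

```python
def sequential_decision(bayes_factor, n, n_min=20, n_max=100, threshold=10.0):
    """Return the action prescribed by a pre-specified sequential stopping rule.

    All parameter values are hypothetical defaults chosen for illustration:
    - bayes_factor: evidence for H1 over H0 computed on the data collected so far
    - n: number of observations collected so far
    - n_min / n_max: minimum and maximum sample sizes fixed in advance
    - threshold: evidence boundary (the rule stops at BF >= threshold or BF <= 1/threshold)
    """
    if n < n_min:
        # Never stop before the minimum sample size, whatever the evidence.
        return "continue"
    if bayes_factor >= threshold:
        return "stop: evidence for H1"
    if bayes_factor <= 1.0 / threshold:
        return "stop: evidence for H0"
    if n >= n_max:
        return "stop: maximum sample size reached"
    return "continue"


if __name__ == "__main__":
    # Hypothetical interim looks at the data: (sample size, Bayes factor) pairs.
    for n, bf in [(10, 15.0), (20, 3.2), (40, 12.5)]:
        print(n, bf, sequential_decision(bf, n))
```

Because the rule is a pure function of the evidence and the sample size, it can be run by a script after each participant without anyone inspecting the data in between.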

Can we decode phonetic features in inner speech using surface electromyography?

Although it has a long history of scrutiny in experimental psychology, whether wilful inner speech (covert speech) production is accompanied by specific activity in speech muscles remains controversial. We present the results of a preregistered experiment looking at the electromyographic correlates of both overt speech and inner speech production of two phonetic classes of nonwords. An automatic classification approach was used to discriminate between two articulatory features contained in nonwords uttered in both overt and covert speech. Although this approach led to reasonable accuracy rates during overt speech production, it failed to discriminate inner speech phonetic content based on surface electromyography signals. However, exploratory analyses conducted at the individual level suggested that, in two participants, it was possible to distinguish between covertly produced rounded and spread nonwords. We discuss these results in relation to the existing literature and suggest alternative ways of testing the engagement of the speech motor system during wilful inner speech production.

Action effects on visual perception of distances: A multilevel Bayesian meta-analysis

Previous studies have suggested that action constraints influence visual perception of distances. For instance, the greater the effort to cover a distance, the longer people perceive this distance to be. The present multilevel Bayesian meta-analysis (37 studies with 1,035 total participants) supported the existence of a small action-constraint effect on distance estimation, Hedges’s g = 0.29, 95% credible interval = [0.16, 0.47]. This effect varied slightly according to the action-constraint category (effort, weight, tool use) but not according to participants’ motor intention. Some authors have argued that such effects reflect experimental demand biases rather than genuine perceptual effects. Our meta-analysis did not allow us to dismiss this possibility, but it also did not support it. We provide field-specific conventions for interpreting action-constraint effect sizes and the minimum sample sizes required to detect them with various levels of power. We encourage researchers to help us update this meta-analysis by directly uploading their published or unpublished data to our online repository (https://osf.io/bc3wn/).
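To give a sense of what an effect of this size implies for study planning, the following Python sketch computes the approximate sample size per group needed to detect a standardised mean difference of 0.29 in a two-sided, two-independent-groups comparison. This is a textbook normal approximation under stated assumptions, not the procedure used in the meta-analysis; the function name `n_per_group` is hypothetical, and the resulting numbers should not be read as the sample sizes reported in the paper.

```python
import math
from statistics import NormalDist


def n_per_group(effect_size, alpha=0.05, power=0.80):
    """Approximate n per group for a two-sided test comparing two independent means.

    Uses the normal approximation n = 2 * ((z_{1 - alpha/2} + z_{power}) / d)^2,
    which slightly underestimates the n given by an exact t-test calculation.
    """
    standard_normal = NormalDist()
    z_alpha = standard_normal.inv_cdf(1 - alpha / 2)
    z_power = standard_normal.inv_cdf(power)
    return math.ceil(2 * ((z_alpha + z_power) / effect_size) ** 2)


if __name__ == "__main__":
    # Effect size taken from the abstract (Hedges's g = 0.29); power levels are illustrative.
    for target_power in (0.80, 0.90, 0.95):
        print(target_power, n_per_group(0.29, power=target_power))
```

Under these assumptions, roughly 187 participants per group are needed for 80% power, which illustrates why small effects of this kind call for samples much larger than the 28 participants per study that the meta-analysed sample averages out to (1,035 participants across 37 studies).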

An introduction to Bayesian multilevel models using brms: A case study of gender effects on vowel variability in standard Indonesian

Bayesian multilevel models are increasingly used to overcome the limitations of frequentist approaches in the analysis of complex structured data. This paper introduces Bayesian multilevel modelling for the specific analysis of speech data, using the brms package developed in R. In this tutorial, we provide a practical introduction to Bayesian multilevel modelling, by reanalysing a phonetic dataset containing formant (F1 and F2) values for five vowels of Standard Indonesian (ISO 639-3:ind), as spoken by eight speakers (four females), with several repetitions of each vowel. We first give an introductory overview of the Bayesian framework and multilevel modelling. We then show how Bayesian multilevel models can be fitted using the probabilistic programming language Stan and the R package brms, which provides an intuitive formula syntax. Through this tutorial, we demonstrate some of the advantages of the Bayesian framework for statistical modelling and provide a detailed case study, with complete source code for full reproducibility of the analyses.

A Cognitive Neuroscience View of Inner Language: To Predict and to Hear, See, Feel

Inner verbalisation can be willful, when we deliberately engage in inner speech (e.g., mental rehearsing, counting, list making) or more involuntary, when unbidden verbal thoughts occur. It can either be expanded (fully phonologically specified) or condensed (cast in a prelinguistic format). Introspection and empirical data suggest that willful expanded inner speech recruits the motor system and involves auditory, proprioceptive, tactile as well as perhaps visual sensations. We present a neurocognitive predictive control model, in which willful inner speech is considered as deriving from multisensory goals arising from sensory cortices. An inverse model transforms desired sensory states into motor commands which are specified in motor regions and inhibited by prefrontal cortex. An efference copy of these motor commands is transformed by a forward model into simulated multimodal acts (inner phonation, articulation, gesture). These simulated acts provide predicted multisensory percepts that are processed in sensory regions and perceived as an inner voice unfolding over time. The comparison between desired sensory states and predicted sensory end states provides the sense of agency, of feeling in control of one’s inner speech. Three types of inner verbalisation can be accounted for in this framework: unbidden thoughts, willful expanded inner speech, and auditory verbal hallucination.

Orofacial electromyographic correlates of induced verbal rumination

Rumination is predominantly experienced in the form of repetitive verbal thoughts. Verbal rumination is a particular case of inner speech. According to the Motor Simulation view, inner speech is a kind of motor action, recruiting the speech motor system. In this framework, we predicted an increase in speech muscle activity during rumination as compared to rest. We also predicted increased forehead activity, associated with anxiety during rumination. We measured electromyographic activity over the orbicularis oris superior and inferior, frontalis and flexor carpi radialis muscles. Results showed increased lip and forehead activity after rumination induction compared to an initial relaxed state, together with increased self-reported levels of rumination. Moreover, our data suggest that orofacial relaxation is more effective in reducing rumination than non-orofacial relaxation. Altogether, these results support the hypothesis that verbal rumination involves the speech motor system, and provide a promising psychophysiological index to assess the presence of verbal rumination.